The set of processes, protocols, and rules involved in managing data, securing data, and generating value from data is termed data management.

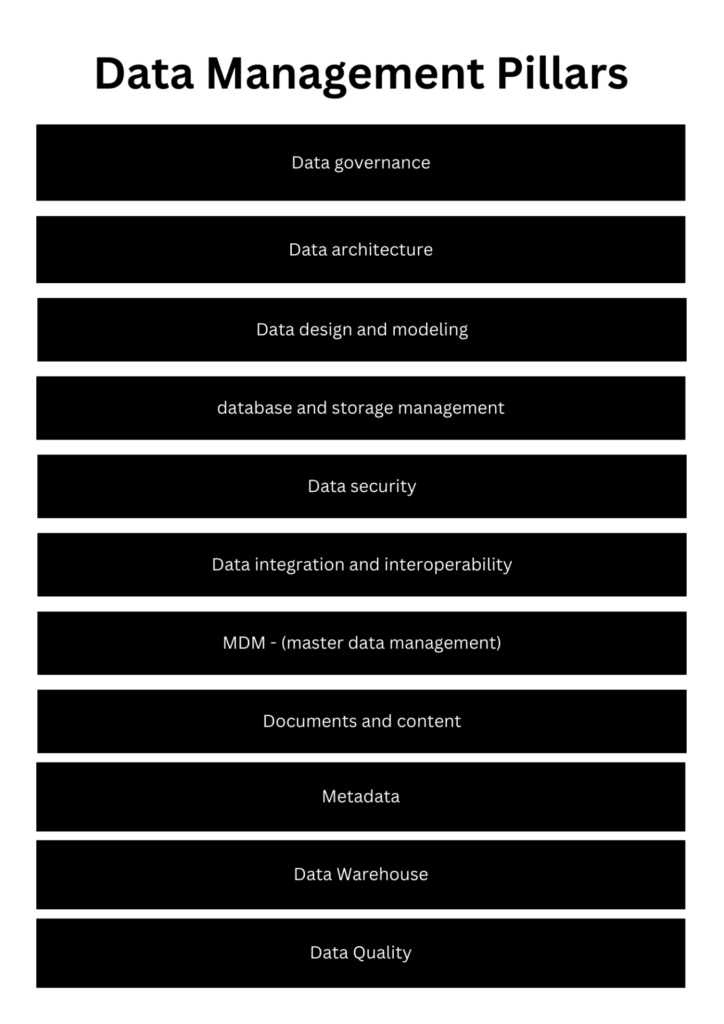

A data management framework needs to be in place to store, manage, access, process, and distribute data effectively across enterprises and over the web. Data management can be divided into 11 key areas:

1) Data governance: data assets | data trustee | data subject | data steward | data ethics | data customization

Data governance refers to the policies, rules, protocols, and framework that govern the management, transfer, flow, and veracity of data. Effective data governance is needed to ensure the veracity and quality of data across storage, access, management, and transfer.

2) Data architecture: data architecture | data structure | DFD (data flow diagram) | PFD (process flow diagram) | ER (entity-relationship) model | data blueprint | information architecture

These are a few of the processes and artifacts that fall under the purview of data architecture.

3) Data design and modeling: This refers to the design and modeling of data. It consists of the logical data model, the physical data model, and virtual and hardware-based data modeling.

The source of truth (SOT) is divided into data units through logical modeling. The data is then stored, filtered, and organized in the hardware layer and transferred via the physical layer.
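The split between a logical and a physical model can be illustrated with a minimal sketch. It assumes a hypothetical `Customer` entity and uses SQLite as a stand-in storage engine; the `SQL_TYPES` mapping and the `physical_ddl` helper are invented for illustration and are not part of any framework described here.

```python
import sqlite3
from dataclasses import dataclass, fields


# Logical model: an entity and its attributes, independent of any storage engine.
@dataclass
class Customer:
    customer_id: int
    name: str
    email: str


# Hypothetical mapping from Python types to SQLite column types.
SQL_TYPES = {int: "INTEGER", str: "TEXT"}


def physical_ddl(entity, primary_key):
    """Derive a physical CREATE TABLE statement from a logical entity."""
    columns = []
    for f in fields(entity):
        column = f"{f.name} {SQL_TYPES[f.type]}"
        if f.name == primary_key:
            column += " PRIMARY KEY"
        columns.append(column)
    return f"CREATE TABLE {entity.__name__.lower()} ({', '.join(columns)})"


# Physical model: the same entity realized as a table in a concrete engine.
ddl = physical_ddl(Customer, "customer_id")
conn = sqlite3.connect(":memory:")
conn.execute(ddl)
conn.execute("INSERT INTO customer VALUES (?, ?, ?)", (1, "Ada", "ada@example.com"))
rows = conn.execute("SELECT customer_id, name, email FROM customer").fetchall()
```

The logical model stays the same if the storage engine changes; only the type mapping and the generated DDL would need to be adapted.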

4) Database and storage management: DB maintenance | DB admin | RDBMS | instruction DB | semi-structured DB | object store | DBaaS

In database and storage management, data is first stored, then sorted and processed, and finally consumed across multiple presentation formats. DBMSs, RDBMSs, data warehouses (DWH), data lakes, and all kinds of structured, semi-structured, and object data stores are managed, operated, and monitored through the database and storage management process.

5) Data security: data access | data encryption | data privacy | data security

All data needs to be kept secure and encrypted. Data privacy is implemented by defining strict access levels, which also helps maintain data integrity. Data security is ensured by adopting efficient and pragmatic data security policies, standards, and operational procedures, and it needs to be achieved in both the virtual and the physical layer.
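The idea of strict access levels can be sketched as a deny-by-default check. The level names, roles, and datasets below are hypothetical examples, and encryption is deliberately left out of this sketch.

```python
from enum import IntEnum


class AccessLevel(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2


# Hypothetical classification of datasets and clearance of roles.
DATASET_LEVEL = {
    "product_catalog": AccessLevel.PUBLIC,
    "payroll": AccessLevel.CONFIDENTIAL,
}
ROLE_CLEARANCE = {
    "guest": AccessLevel.PUBLIC,
    "analyst": AccessLevel.INTERNAL,
    "hr_admin": AccessLevel.CONFIDENTIAL,
}


def can_read(role, dataset):
    """Allow a read only when the role's clearance covers the dataset's level."""
    clearance = ROLE_CLEARANCE.get(role)
    level = DATASET_LEVEL.get(dataset)
    if clearance is None or level is None:
        return False  # deny by default for unknown roles or datasets
    return clearance >= level
```

For example, `can_read("analyst", "payroll")` is refused because an internal clearance does not cover confidential data, while `can_read("hr_admin", "payroll")` is allowed.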

6) Data integration and interoperability: ETL | ELT | DWH | OLAP | OLTP

Data movement and data interoperability processes need to be standardized and well defined. Data is integrated via an ingestion engine or API data sources, and an ETL / ELT pipeline is created to acquire, integrate, and process the data into the desired, consumable format. A master data management implementation is vital for keeping a well-defined data posture.
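An ETL pipeline of the kind listed under data integration can be sketched in three small functions. This is a minimal illustration, assuming a hypothetical CSV export as the source and an in-memory SQLite table as the target; the `orders` schema and the cleaning rules are invented for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw export standing in for an API or ingestion-engine source.
RAW_CSV = """order_id,amount,currency
1, 19.99 ,usd
2,5.00,USD
3,,usd
"""


def extract(text):
    """Extract: read raw rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim whitespace, normalize currency codes, skip rows with no amount."""
    clean = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue
        clean.append((int(row["order_id"]), float(amount), row["currency"].strip().upper()))
    return clean


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, amount, currency FROM orders ORDER BY order_id"
).fetchall()
```

In an ELT variant, the raw rows would be loaded first and the transformation would run inside the target store instead.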

7) MDM (master data management): Master data management ensures that the main source of truth (SOT) is well managed and maintained. The master data has to be high quality, and the SOT should be referred to as master data. The data stores should use a master/slave schema or read replicas as highly reliable backup systems; either deployment is acceptable.
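The master/replica idea can be illustrated with a toy in-memory store. This is only a sketch under simplifying assumptions: the `ReplicatedStore` class is hypothetical, copies are made synchronously, and real database replication (asynchronous shipping, failover, conflict handling) is not modeled.

```python
class ReplicatedStore:
    """Toy store: writes go to the master copy, reads are served by replicas."""

    def __init__(self, replica_count):
        if replica_count < 1:
            raise ValueError("at least one replica is required")
        self.primary = {}
        self.replicas = [{} for _ in range(replica_count)]
        self._next = 0

    def write(self, key, value):
        # The master copy is the source of truth; each replica receives a copy.
        self.primary[key] = value
        for replica in self.replicas:
            replica[key] = value

    def read(self, key):
        # Rotate through the replicas so reads are spread across them.
        replica = self.replicas[self._next % len(self.replicas)]
        self._next += 1
        return replica[key]


store = ReplicatedStore(replica_count=2)
store.write("customer:1", {"name": "Ada"})
first_read = store.read("customer:1")
second_read = store.read("customer:1")
```

Because every replica holds a full copy, any one of them can also serve as a backup of the master data.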

8) Documents and content: document management system | flat file management | record management | file storage

Files and documents are stored securely in blob, flat file, and object data stores, which can hold all media types. An efficient document management system should be integrated; it runs over a logical system that carries out the read and write operations on files.

Structured, semi-structured, and object data can be stored in their respective data stores.

9) Metadata: metadata management | metadata | metadata discovery | metadata publishing | metadata registry

The metadata of the data needs to be managed, discovered, and published, which can be done by following an effective metadata management strategy.

All data has metadata associated with it. By following metadata management processes, files are organized and linked directly with file management. Data can be retrieved via its metadata, and metadata can be retrieved via the data, so this reciprocity between data and metadata management is important for keeping both secure and accessible.

Metadata publishing, metadata registration, metadata discovery, and several other processes help with the storage, access management, and security of metadata. Just as data is managed, metadata is managed too, and metadata management is part of data management.
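Registration, discovery, and the two-way lookup between data and metadata can be sketched with a small in-memory registry. The `MetadataRecord` fields and the `MetadataRegistry` class are hypothetical names chosen for this example, not a reference to any specific metadata tool.

```python
from dataclasses import dataclass, field


@dataclass
class MetadataRecord:
    data_id: str  # identifier of the data object this metadata describes
    owner: str
    tags: set = field(default_factory=set)


class MetadataRegistry:
    """Toy registry supporting registration, lookup, and tag-based discovery."""

    def __init__(self):
        self._records = {}

    def register(self, record):
        self._records[record.data_id] = record

    def metadata_for(self, data_id):
        # Data to metadata: look up the record describing a data object.
        return self._records[data_id]

    def discover(self, tag):
        # Metadata to data: find the data objects that carry a given tag.
        return sorted(r.data_id for r in self._records.values() if tag in r.tags)


registry = MetadataRegistry()
registry.register(MetadataRecord("invoice-2023.pdf", "finance", {"invoice", "pdf"}))
registry.register(MetadataRecord("logo.png", "marketing", {"image"}))
invoice_files = registry.discover("invoice")
logo_owner = registry.metadata_for("logo.png").owner
```

Publishing could then be as simple as exporting the registered records to a shared catalog, which is left out of this sketch.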

10) Data warehouse (BI, PML, data science)

BI | data mining | data workers | data analyst | ML with big data | ETL interaction | data sources | process automation | ETL

All these processes help in extracting high-value insights from data. The data is visualized with tools like Power BI, and data mining activities are carried out by data teams.

The high-quality, valuable data that is considered the source of truth (SOT) can be effectively consumed for business intelligence, predictive analysis, and R&D activities to achieve higher data ROI.

11) Data quality: data discovery | data cleansing | data integrity | data enrichment | data quality assurance | secondary data

Data quality is a must for data-driven growth. The data sources have to be credible, and the right set of data cleansing processes has to be synchronized with the data architecture and data governance policies to achieve higher data ROI.

Data quality ensures high data consistency and integrity, which in turn drive process efficiency and scalability. Data quality management needs to be synchronized with data compliance, because the compliance and data governance framework supports both higher data quality and data-driven growth.
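A data cleansing step and a simple quality metric can be sketched as follows. The record layout, the rule of using the email address as the deduplication key, and the `completeness` metric are assumptions made for this example only.

```python
def cleanse(records):
    """Drop records without an email, normalize the rest, and remove duplicates."""
    seen = set()
    clean = []
    for record in records:
        email = (record.get("email") or "").strip().lower()
        if not email or email in seen:
            continue  # incomplete or duplicate record
        seen.add(email)
        clean.append({"name": record["name"].strip(), "email": email})
    return clean


def completeness(records, field_name):
    """Share of records in which the given field is filled in."""
    if not records:
        return 0.0
    filled = sum(1 for record in records if record.get(field_name))
    return filled / len(records)


# Hypothetical raw input with inconsistent formatting, a duplicate, and a gap.
raw = [
    {"name": " Ada ", "email": "ADA@example.com"},
    {"name": "Ada", "email": "ada@example.com "},
    {"name": "Bob", "email": None},
]
clean = cleanse(raw)
before = completeness(raw, "email")
after = completeness(clean, "email")
```

Comparing a metric such as completeness before and after cleansing is one simple way to make data quality assurance measurable.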


The article above is rendered by integrating outputs of 1 HUMAN AGENT & 3 AI AGENTS, an amalgamation of HGI and AI to serve technology education globally.